The Hyper-Scale Engine: How NVIDIA Run:ai and Dynamo Conquer LLM Latency with Smart Multi-Node Scheduling

H2: The Scaling Challenge in Generative AI: From Single GPU to Multi-Node Complexity

For early-stage founders building Generative AI products, where every millisecond of inference latency affects user experience and cost, scaling Large Language Models (LLMs) is a formidable challenge. Modern LLMs such as Llama and DeepSeek are large enough that they often require distributed deployment across multiple GPUs, and sometimes across multiple server nodes.

This multi-node complexity introduces three core problems for startups and established tech ventures alike:

- Orchestration Failure: Distributed LLM inference workloads (like those served by NVIDIA Dynamo) are composed of tightly coupled components: routers, prefill workers, and decode workers. If one component fails to launch, the others cannot serve traffic on their own, and the entire service stalls.

- Communication Latency: Components must communicate constantly, transferring the massive Key-Value (KV) cache. If these interdependent components are placed on physically distant servers or racks, cross-node communication latency explodes, crippling throughput.

- Resource Inefficiency: Traditional schedulers lack “LLM-awareness.” They might deploy only part of a workload, leaving expensive GPU resources fragmented and underutilized—a critical waste for startups operating on a tight runway.
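
To make the communication problem concrete, here is a rough back-of-the-envelope sketch of how large a KV cache can get and how long it takes to move. The formula (keys plus values, per layer, per KV head, per token) is the standard one for transformer attention; the model shape and the two bandwidth figures are hypothetical values chosen for illustration, not measurements of any specific model or cluster.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, num_tokens, bytes_per_value=2):
    """Size of the KV cache for one sequence.

    The leading factor of 2 accounts for storing both a key and a value
    vector per layer, per KV head, per token. bytes_per_value=2 assumes FP16.
    """
    return 2 * num_layers * num_kv_heads * head_dim * num_tokens * bytes_per_value


def transfer_seconds(num_bytes, bandwidth_gb_per_s):
    """Idealized transfer time, ignoring protocol overhead and contention."""
    return num_bytes / (bandwidth_gb_per_s * 1e9)


# Hypothetical model shape: 32 layers, 8 KV heads, head dimension 128, FP16.
size = kv_cache_bytes(num_layers=32, num_kv_heads=8, head_dim=128, num_tokens=8192)

# Hypothetical link speeds: a fast intra-node link vs. a slower cross-node link.
intra_node = transfer_seconds(size, bandwidth_gb_per_s=450)
cross_node = transfer_seconds(size, bandwidth_gb_per_s=25)

print(f"KV cache: {size / 2**30:.1f} GiB")
print(f"intra-node: {intra_node * 1e3:.1f} ms, cross-node: {cross_node * 1e3:.1f} ms")
```

Under these assumptions a single 8K-token sequence carries about 1 GiB of KV cache, so the link between components matters for every request that is handed off.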

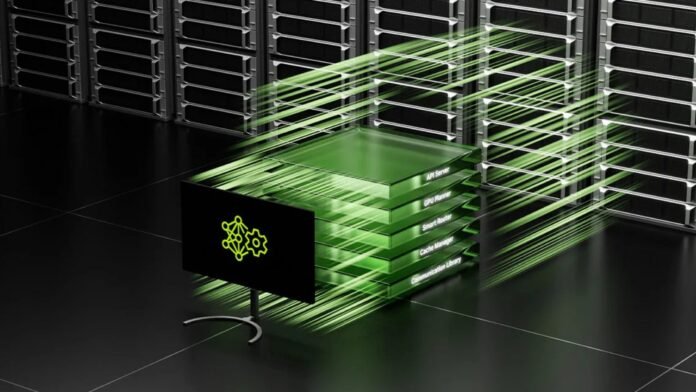

Integrating the NVIDIA Run:ai platform (an AI orchestration layer acquired by NVIDIA) with the open-source NVIDIA Dynamo inference framework addresses all three problems through Smart Multi-Node Scheduling, which combines gang scheduling with topology-aware placement.

H2: Dynamo’s Disaggregation: The Foundation for Hyper-Efficiency

Before scheduling, the NVIDIA Dynamo framework itself provides key architectural innovations that enable high throughput:

H3: Disaggregated Prefill and Decode Inference

Dynamo is built around disaggregated serving. LLM inference has two distinct phases with different hardware needs:

- Prefill Phase (Prompt Processing): Compute-bound and benefits from massive parallel processing.

- Decode Phase (Token Generation): Memory-bandwidth-bound and latency-sensitive.

Dynamo separates these phases onto different GPU resources, allowing each to be scaled and optimized independently. This flexibility helps maximize GPU utilization while keeping Time-to-First-Token (TTFT), a crucial user-experience metric, low.
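
The practical benefit of separating the phases is that each pool can be sized for its own bottleneck. The sketch below is a simplified illustration of that idea, not Dynamo's actual planner: `size_pools` and all throughput numbers are hypothetical, and a real deployment would measure per-worker throughput rather than assume it.

```python
import math
from dataclasses import dataclass


@dataclass
class PoolSizing:
    prefill_workers: int
    decode_workers: int


def size_pools(prompt_tokens_per_s, output_tokens_per_s,
               prefill_tokens_per_worker_s, decode_tokens_per_worker_s):
    """Size each pool independently from its own load and per-worker throughput.

    Each pool always gets at least one worker so the service can respond.
    """
    return PoolSizing(
        prefill_workers=max(1, math.ceil(prompt_tokens_per_s / prefill_tokens_per_worker_s)),
        decode_workers=max(1, math.ceil(output_tokens_per_s / decode_tokens_per_worker_s)),
    )


# Hypothetical traffic mixes with the same assumed per-worker throughput.
# Summarization: long prompts, short outputs -> prefill-heavy.
summarization = size_pools(40_000, 2_000, 10_000, 1_000)
# Chat: short prompts, long outputs -> decode-heavy.
chat = size_pools(5_000, 6_000, 10_000, 1_000)

print(summarization)
print(chat)
```

With a single shared pool, both traffic mixes would have to be provisioned for the worse of the two phases; with separate pools, each mix gets a different prefill-to-decode ratio.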

H3: LLM-Aware Request Routing

Dynamo’s Smart Router is KV-cache-aware: it tracks the state of the KV cache across the entire fleet of distributed GPUs. This allows it to:

- Minimize Recomputation: If a new request shares an identical prefix (e.g., a long system prompt in an agentic workflow or a prior chat history), the router directs the request to a worker that already has the necessary KV cache stored.

- Dynamic Scheduling: It continuously monitors GPU capacity and prefill activity, dynamically allocating resources between the prefill and decode stages based on real-time traffic, ensuring the most efficient use of resources.
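
The routing idea above can be sketched in a few lines. This is a minimal illustration that assumes a simplified scoring rule (longest cached prefix first, then lowest load); it is not Dynamo's router implementation, and `Worker`, `shared_prefix_len`, and `route` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    name: str
    active_requests: int = 0
    # Token-id sequences whose KV cache this worker is assumed to hold.
    cached_prefixes: list = field(default_factory=list)


def shared_prefix_len(a, b):
    """Number of leading tokens that two sequences have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def route(tokens, workers):
    """Pick the worker with the longest cached prefix; break ties by lower load."""
    def score(worker):
        overlap = max(
            (shared_prefix_len(tokens, prefix) for prefix in worker.cached_prefixes),
            default=0,
        )
        return (overlap, -worker.active_requests)

    return max(workers, key=score)


busy_but_warm = Worker("worker-a", active_requests=5, cached_prefixes=[(1, 2, 3, 4)])
idle_but_cold = Worker("worker-b", active_requests=0)
fleet = [busy_but_warm, idle_but_cold]

# Shares a 3-token prefix with worker-a's cache, so it goes there despite the load.
print(route((1, 2, 3, 9), fleet).name)
# No cache overlap anywhere, so the less loaded worker wins.
print(route((7, 8), fleet).name)
```

A production router would weigh cache overlap against load rather than always preferring overlap, since sending every request to one warm worker would eventually overload it.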

H2: The Run:ai Integration: Orchestration that Guarantees Performance

The true power of Smart Multi-Node Scheduling emerges when NVIDIA Run:ai’s orchestration capabilities are applied to Dynamo’s architecture. Run:ai brings two essential, non-negotiable scheduling principles:

1. Gang Scheduling: The All-or-Nothing Guarantee

Dynamo workloads are gang-scheduled by Run:ai. This means the entire group of tightly coupled components (prefill workers, decode workers, routers) is treated as a single, atomic unit.

- Success: If the entire gang can be placed and launched simultaneously, it proceeds.

- Failure: If even one component cannot be placed, the entire group waits.

For teams running inference in production, this eliminates the risk of partial deployments, preventing scenarios where a GPU is tied up with an incomplete workload that can’t actually serve traffic. It ensures efficient GPU allocation and predictable service availability.
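
The all-or-nothing rule can be sketched as a trial placement that is committed only if every component fits. This is a simplified illustration using a first-fit heuristic, not the Run:ai scheduler; `gang_schedule`, the node names, and the GPU counts are hypothetical.

```python
def gang_schedule(free_gpus, components):
    """Place every component or none.

    free_gpus:  dict of node name -> free GPU count (updated only on success).
    components: dict of component name -> GPUs required.
    Returns a dict of component -> node, or None if the gang must wait.
    """
    trial = dict(free_gpus)  # work on a copy so a failure leaves no trace
    placement = {}
    # Place the largest components first; first-fit is a heuristic and may
    # miss a feasible packing that an exhaustive search would find.
    for name, gpus in sorted(components.items(), key=lambda item: -item[1]):
        node = next((n for n, free in trial.items() if free >= gpus), None)
        if node is None:
            return None  # one component does not fit, so nothing is placed
        trial[node] -= gpus
        placement[name] = node
    free_gpus.clear()
    free_gpus.update(trial)  # commit only after the whole gang fits
    return placement


cluster = {"node-a": 4, "node-b": 2}
gang = {"prefill": 4, "decode": 2}
print(gang_schedule(cluster, gang))   # whole gang fits
print(cluster)                        # capacity consumed

small_cluster = {"node-a": 4, "node-b": 1}
print(gang_schedule(small_cluster, gang))  # decode cannot fit, gang waits
print(small_cluster)                       # capacity untouched
```

The important property is in the failure case: the prefill worker could have been placed on its own, but doing so would strand four GPUs behind a service that cannot answer requests.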

2. Topology-Aware Placement: Minimizing Cross-Node Latency

This is the secret sauce for speed. Topology-aware scheduling is the strategic placement of related components to minimize the physical distance data must travel.

- Admin-Defined Topology: Cluster administrators define the network topology (which GPUs are in which node, which nodes are in which rack, and which racks are in which zone).

- Scheduler Optimization: The Run:ai scheduler uses this map to apply a “preferred” soft constraint, attempting to co-locate the required Dynamo components (e.g., a prefill worker and its corresponding decode worker) at the closest possible tier (e.g., the same node, then the same rack, then the same zone).

By prioritizing proximity, the scheduler reduces the latency of the high-volume KV cache transfer between components, helping the theoretical performance gains of Dynamo translate into real-world, low-latency inference.
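
The "preferred" soft constraint described above can be sketched as ranking candidate nodes by how many topology tiers separate them from an anchor node. This is an illustrative sketch under simple assumptions (three tiers, one anchor), not the Run:ai scheduler; `Node`, `distance_tier`, and `place_near` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    rack: str
    zone: str
    free_gpus: int


def distance_tier(a, b):
    """0 = same node, 1 = same rack, 2 = same zone, 3 = different zone."""
    if a.name == b.name:
        return 0
    if a.zone == b.zone and a.rack == b.rack:
        return 1
    if a.zone == b.zone:
        return 2
    return 3


def place_near(anchor, candidates, gpus_needed):
    """Soft constraint: prefer the closest tier, but accept any node with capacity."""
    eligible = [n for n in candidates if n.free_gpus >= gpus_needed]
    if not eligible:
        return None
    return min(eligible, key=lambda n: distance_tier(anchor, n))


# The prefill worker is assumed to already run on n1, which is now full.
n1 = Node("n1", rack="r1", zone="z1", free_gpus=0)
n2 = Node("n2", rack="r1", zone="z1", free_gpus=2)
n3 = Node("n3", rack="r2", zone="z1", free_gpus=8)
n4 = Node("n4", rack="r9", zone="z2", free_gpus=8)
nodes = [n1, n2, n3, n4]

print(place_near(n1, nodes, gpus_needed=2).name)  # same rack is available
print(place_near(n1, nodes, gpus_needed=4).name)  # falls back to same zone
```

Because the constraint is soft, a decode worker that needs more GPUs than the nearest rack can offer still gets placed, just one tier further out, instead of blocking the workload.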

H2: Key Takeaways for Founders

The Run:ai and Dynamo integration offers practical lessons for any founder scaling AI infrastructure:

- Orchestration is Performance: The performance of an LLM is no longer just about the GPU hardware; it’s about the intelligence of the orchestration layer that manages resource assignment. Investing in smart scheduling tools is as critical as investing in compute.

- Design for Atomicity: Embrace the “gang scheduling” mindset. Complex, interdependent systems must be treated as atomic units. If your core business process requires multiple services to work together, ensure they either all succeed or all fail together to maintain system integrity and avoid stranded resources.

- Context is King: The power of Dynamo lies in its contextual intelligence (LLM-awareness and KV cache routing). In business, this means avoiding unnecessary work: always look for ways to reuse context, cache prior work, and route demands to the resources best equipped to handle them, reducing computational waste and maximizing speed.

The era of simple round-robin load balancing for LLMs is over. By adopting the disaggregated architecture of Dynamo and the intelligent, all-or-nothing scheduling of Run:ai, founders can build performant, cost-effective, and scalable Generative AI services.
